Tag: Linux

Setting up a guest network with Unifi APs

I’ve been pretty happy with the Unifi wifi access points I picked up a few months back, but one of the things I hadn’t managed to replicate over my old setup was a guest wifi network.

If I went all-in and bought a Unifi router, this would probably be fairly trivial to set up. But I wanted to build on the equipment I already had for now. Looking at some old docs, I’d need to get trunked VLAN traffic to the APs to separate the main and guest networks.

Running the UniFi Controller under LXD

A while back I bought some UniFi access points. I hadn’t gotten round to setting up the Network Controller software to properly manage them though, so thought I’d dig into setting that up.

Building IoT projects with Ubuntu Core talk

Last week I gave a talk at Perth Linux Users Group about building IoT projects using Ubuntu Core and Snapcraft. The video is now available online. Unfortunately there were some problems with the audio setup leading to some background noise in the video, but it is still intelligible:

The slides used in the talk can be found here.

Performing mounts securely on user owned directories

While working on a feature for snapd, we had a need to perform a "secure bind mount". In this context, "secure" meant:

- The source and/or target of the mount is owned by a less privileged user.

- User processes will continue to run while we're performing the mount (so solutions that involve suspending all user processes are out).

- While we can't prevent the user from moving the mount point, they should not be able to trick us into mounting to locations they don't control (e.g. by replacing the path with a symbolic link).

The main problem is that the mount system call uses string path names to identify the mount source and target. While we can perform checks on the paths before the mounts, we have no way to guarantee that the paths don't point to another location when we move on to the mount() system call: a classic time of check to time of use race condition.
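One common way to narrow this kind of race is to stop passing user-controlled path strings around at all: open the directory once, one path component at a time while refusing symbolic links, and afterwards refer to it only through the resulting file descriptor. The sketch below illustrates that idea in Python on Linux. It is not necessarily what snapd does, the helper names `open_dir_nofollow` and `fd_path` are made up for this example, and it stops short of calling mount() itself.

```python
import os


def open_dir_nofollow(path):
    """Open an absolute directory path one component at a time.

    Every component is opened relative to the previous one with
    O_NOFOLLOW, so a symbolic link anywhere along the path makes the
    open fail instead of silently redirecting us. (Hypothetical helper.)
    """
    if not os.path.isabs(path):
        raise ValueError("path must be absolute")
    flags = os.O_PATH | os.O_DIRECTORY | os.O_NOFOLLOW | os.O_CLOEXEC
    fd = os.open("/", flags)
    try:
        for part in path.split("/"):
            if part in ("", "."):
                continue
            if part == "..":
                raise ValueError("'..' components are not allowed")
            next_fd = os.open(part, flags, dir_fd=fd)
            os.close(fd)
            fd = next_fd
    except BaseException:
        os.close(fd)
        raise
    return fd


def fd_path(fd):
    """Name the already-opened directory via procfs rather than by its
    original, user-controllable path string."""
    return f"/proc/self/fd/{fd}"
```

Ownership checks can then be made with `os.fstat(fd)` on the descriptor, and `fd_path(fd)` handed to whatever performs the mount, so the check and the use refer to the same directory even if the user renames or replaces the original path in between.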

ThinkPad Infrared Camera

One of the options available when configuring my ThinkPad was an infrared camera, the main selling point being "Windows Hello" facial recognition based login. While I wasn't planning on keeping Windows on the system, I was curious to see what I could do with it under Linux. Hopefully this is of use to anyone else trying to get it to work.

The camera is manufactured by Chicony Electronics (probably a CKFGE03 or similar), and shows up as two USB devices:

Tag: Networking

Setting up a guest network with Unifi APs

I’ve been pretty happy with the Unifi wifi access points I picked up a few months back, but one of the things I hadn’t managed to replicate over my old setup was a guest wifi network.

If I went all-in and bought a Unifi router, this would probably be fairly trivial to set up. But I wanted to build on the equipment I already had for now. Looking at some old docs, I’d need to get trunked VLAN traffic to the APs to separate the main and guest networks.

Running the UniFi Controller under LXD

A while back I bought some UniFi access points. I hadn’t gotten round to setting up the Network Controller software to properly manage them though, so thought I’d dig into setting that up.

Tag: UniFi

Setting up a guest network with Unifi APs

I’ve been pretty happy with the Unifi wifi access points I picked up a few months back, but one of the things I hadn’t managed to replicate over my old setup was a guest wifi network.

If I went all-in and bought a Unifi router, this would probably be fairly trivial to set up. But I wanted to build on the equipment I already had for now. Looking at some old docs, I’d need to get trunked VLAN traffic to the APs to separate the main and guest networks.

Running the UniFi Controller under LXD

A while back I bought some UniFi access points. I hadn’t gotten round to setting up the Network Controller software to properly manage them though, so thought I’d dig into setting that up.

Tag: Ubuntu

Running the UniFi Controller under LXD

A while back I bought some UniFi access points. I hadn’t gotten round to setting up the Network Controller software to properly manage them though, so thought I’d dig into setting that up.

Exploring Github Actions

To help keep myself honest, I wanted to set up automated test runs on a few personal projects I host on Github. At first I gave Travis a try, since a number of projects I contribute to use it, but it felt a bit clunky. When I found Github had a new CI system in beta, I signed up for the beta and was accepted a few weeks later.

While it is still in development, the configuration language feels lean and powerful. In comparison, Travis's configuration language has obviously evolved over time with some features not interacting properly (e.g. matrix expansion only working on the first job in a workflow using build stages). While I've never felt like I had a complete grasp of the Travis configuration language, the single page description of Actions configuration language feels complete.

Building IoT projects with Ubuntu Core talk

Last week I gave a talk at Perth Linux Users Group about building IoT projects using Ubuntu Core and Snapcraft. The video is now available online. Unfortunately there were some problems with the audio setup leading to some background noise in the video, but it is still intelligible:

The slides used in the talk can be found here.

Ubuntu Desktop

When the Ubuntu Phone project was cancelled, I moved to the desktop team. The initial goal for the team was to bring up a GNOME 3 based desktop for the Ubuntu 17.10 release that would be familiar to both Ubuntu users coming from the earlier Unity desktop, and users of “vanilla” GNOME 3.

Performing mounts securely on user owned directories

While working on a feature for snapd, we had a need to perform a "secure bind mount". In this context, "secure" meant:

- The source and/or target of the mount is owned by a less privileged user.

- User processes will continue to run while we're performing the mount (so solutions that involve suspending all user processes are out).

- While we can't prevent the user from moving the mount point, they should not be able to trick us into mounting to locations they don't control (e.g. by replacing the path with a symbolic link).

The main problem is that the mount system call uses string path names to identify the mount source and target. While we can perform checks on the paths before the mounts, we have no way to guarantee that the paths don't point to another location when we move on to the mount() system call: a classic time of check to time of use race condition.

ThinkPad Infrared Camera

One of the options available when configuring my ThinkPad was an infrared camera, the main selling point being "Windows Hello" facial recognition based login. While I wasn't planning on keeping Windows on the system, I was curious to see what I could do with it under Linux. Hopefully this is of use to anyone else trying to get it to work.

The camera is manufactured by Chicony Electronics (probably a CKFGE03 or similar), and shows up as two USB devices:

Ubuntu Phone and Unity

At the end of 2012, I moved from Ubuntu One to the Unity API Team at Canonical. This team was responsible for various services that supported the Unity desktop shell: most noticeably the search functionality. This work initially focused on the Unity 7 desktop shipping with Ubuntu, but then changed focus to the Unity 8 rewrite used by the Ubuntu Phone project.

Ubuntu One

Ubuntu One was a set of online services provided by Canonical for Ubuntu users. It provided cloud hosted storage for files and structured data, synchronised to the user’s local machine. The Ubuntu One service was discontinued in 2014.

u1ftp: a demonstration of the Ubuntu One API

One of the projects I've been working on has been to improve aspects of the Ubuntu One Developer Documentation web site. While there are still some layout problems we are working on, it is now in a state where it is a lot easier for us to update.

I have been working on updating our authentication/authorisation documentation and revising some of the file storage documentation (the API used by the mobile Ubuntu One clients). To help verify that the documentation was useful, I wrote a small program to exercise those APIs. The result is u1ftp: a program that exposes a user's files via an FTP daemon running on localhost. In conjunction with the OS file manager or a dedicated FTP client, this can be used to conveniently access your files on a system without the full Ubuntu One client installed.

Launchpad code scanned by Ohloh

Today Ohloh finished importing the Launchpad source code and produced the first source code analysis report. There seems to be something fishy about the reported line counts (e.g. -3,291 lines of SQL), but the commit counts and contributor list look about right. If you're interested in what sort of effort goes into producing an application like Launchpad, then it is worth a look.

Comments:

e -

Have you seen the perl language?

More Rygel testing

In my last post, I said I had trouble getting Rygel's tracker backend to function and assumed that it was expecting an older version of the API. It turns out I was incorrect and the problem was due in part to Ubuntu specific changes to the Tracker package and the unusual way Rygel was trying to talk to Tracker.

The Tracker packages in Ubuntu remove the D-Bus service activation file for the "org.freedesktop.Tracker" bus name so that if the user has not chosen to run the service (or has killed it), it won't be automatically activated. Unfortunately, instead of just calling a Tracker D-Bus method, Rygel was trying to manually activate Tracker via a StartServiceByName() call. This would fail even if Tracker was running, hence my assumption that it was a tracker API version problem.

Ubuntu packages for Rygel

I promised Zeeshan that I'd have a look at his Rygel UPnP Media Server a few months back, and finally got around to doing so. For anyone else who wants to give it a shot, I've put together some Ubuntu packages for Jaunty and Karmic in a PPA here:

Most of the packages there are just rebuilds or version updates of existing packages, but the Rygel ones were done from scratch. It is the first Debian package I've put together from scratch and it wasn't as difficult as I thought it might be. The tips from the "Teach me packaging" workshop at the Canonical All Hands meeting last month were quite helpful.

django-openid-auth

Last week, we released the source code to django-openid-auth. This is a small library that can add OpenID based authentication to Django applications. It has been used for a number of internal Canonical projects, including the sprint scheduler Scott wrote for the last Ubuntu Developer Summit, so it is possible you've already used the code.

Rather than trying to cover all possible use cases of OpenID, it focuses on providing OpenID Relying Party support to applications using Django's django.contrib.auth authentication system. As such, it is usually enough to edit just two files in an existing application to enable OpenID login.

Streaming Vorbis files from Ubuntu to a PS3

One of the nice features of the PlayStation 3 is the UPNP/DLNA media renderer. Unfortunately, the set of codecs is pretty limited, which is a problem since most of my music is encoded as Vorbis. MediaTomb was suggested to me as a server that could transcode the files to a format the PS3 could understand.

Unfortunately, I didn’t have much luck with the version included with Ubuntu 8.10 (Intrepid), and after a bit of investigation it seems that there isn’t a released version of MediaTomb that can send PCM audio to the PS3. So I put together a package of a subversion snapshot in my PPA which should work on Intrepid.

Prague

I arrived in Prague yesterday for the Ubuntu Developer Summit. Including time spent in transit in Singapore and London, the flights took about 30 hours.

As I was flying on BA, I got to experience Heathrow Terminal 5. It wasn't quite as bad as some of the horror stories I'd heard. There were definitely aspects that weren't forgiving of mistakes. For example, when taking the train to the "B" section there was a sign saying that if you accidentally got on the train when you shouldn't have it would take 40 minutes to get back to the "A" section.

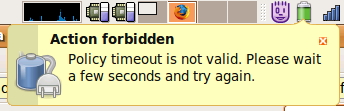

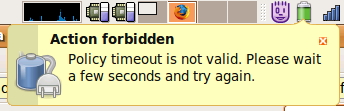

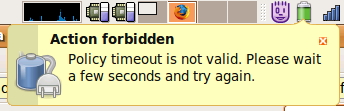

Weird GNOME Power Manager error message

Since upgrading to Ubuntu Gutsy I've occasionally been seeing the following notification from GNOME Power Manager:

I'd usually trigger this error by unplugging the AC adapter and then picking suspend from GPM's left click menu.

My first thought on seeing this was "What's a policy timeout, and why is it not valid?" followed by "I don't remember setting a policy timeout". Looking at bug 492132 I found a pointer to the policy_suppression_timeout gconf value, whose description gives a bit more information.

On the way to Boston

I am at Narita Airport at the moment, on the way to Boston for some of the meetings being held during UDS. It'll be good to catch up with everyone again.

Hopefully this trip won't be as eventful as the previous one to Florida :)

Schema Generation in ORMs

When Storm was released, one of the comments made was that it did not include the ability to generate a database schema from the Python classes used to represent the tables, even though this feature is available in a number of competing ORMs. The simple reason for this is that we haven't used schema generation in any of our ORM-using projects.

Furthermore I'd argue that schema generation is not really appropriate for long lived projects where the data stored in the database is important. Imagine developing an application along these lines:

Upgrading to Ubuntu Gutsy

I got round to upgrading my desktop system to Gutsy today. I'd upgraded my laptop the previous week, so was not expecting much in the way of problems.

I'd done the original install on my desktop back in the Warty days, and the root partition was a bit too small to perform the upgrade. As there was a fair bit of accumulated crud, I decided to do a clean install. Things mostly worked, but there were a few problems, which I detail below:

Canonical Shop Open

The new Canonical Shop was opened recently which allows you to buy anything from Ubuntu tshirts and DVDs up to a 24/7 support contract for your server.

One thing to note is that this is the first site using our new Launchpad single sign-on infrastructure. We will be rolling this out to other sites in time, which should give a better user experience than the existing shared authentication system currently in place for the wikis.

gnome-vfs-obexftp 0.4

It hasn't been long since the last gnome-vfs-obexftp release, but I thought it'd be good to get these fixes out before undertaking more invasive development. The new version is available from:

The highlights of this release are:

- If the phone does not provide free space values in the OBEX capability object, do not report this as zero free space. This fixes Nautilus file copy behaviour on a number of Sony Ericsson phones.

- Fix date parsing when the phone returns UTC timestamps in the folder listings.

- Add some tests for the capability object and folder listing XML parsers. Currently has sample data for Nokia 6230, Motorola KRZR K1, and Sony K800i, Z530i and Z710i phones.

These fixes should improve the user experience for owners of some Sony Ericsson phones by letting them copy files to the phone, rather than Nautilus just telling them that there is no free space. Unfortunately, if there isn't enough free space you'll get an error part way through the copy. This is the best that can be done with the information provided by the phone.

Investigating OBEX over USB

I've had a number of requests for USB support in gnome-vfs-obexftp. At first I didn't have much luck talking to my phone via USB. Running the obex_test utility from OpenOBEX gave the following results:

$ obex_test -u

Using USB transport, querying available interfaces

Interface 0: (null)

Interface 1: (null)

Interface 2: (null)

Use 'obex_test -u interface_number' to run interactive OBEX test client

Trying to talk via any of these interface numbers failed. After reading up a bit, it turned out that I needed to add a udev rule to give permissions on my phone. After doing so, I got a better result:

gnome-vfs-obexftp 0.3

I've just released a new version of gnome-vfs-obexftp, which includes the features discussed previously. It can be downloaded from:

The highlights of the release include:

- Sync osso-gwobex and osso-gnome-vfs-extras changes from Maemo Subversion.

- Instead of asking hcid to set up the RFCOMM device for communication, use an RFCOMM socket directly. This is both faster and doesn't require enabling experimental hcid interfaces. Based on work from Bastien Nocera.

- Improve free space calculation for Nokia phones with multiple memory types (e.g. phone memory and a memory card). Now the free space for the correct memory type for a given directory should be returned. This fixes various free-space dependent operations in Nautilus such as copying files.

Any bug reports should be filed in Launchpad at:

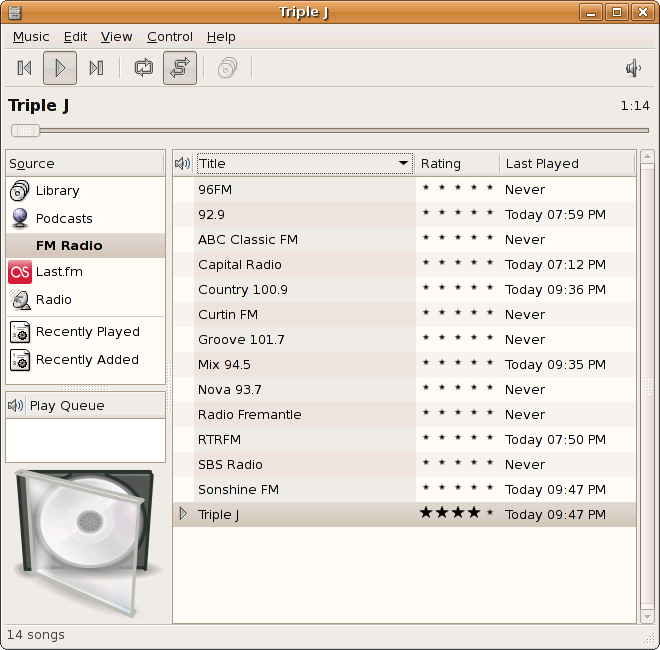

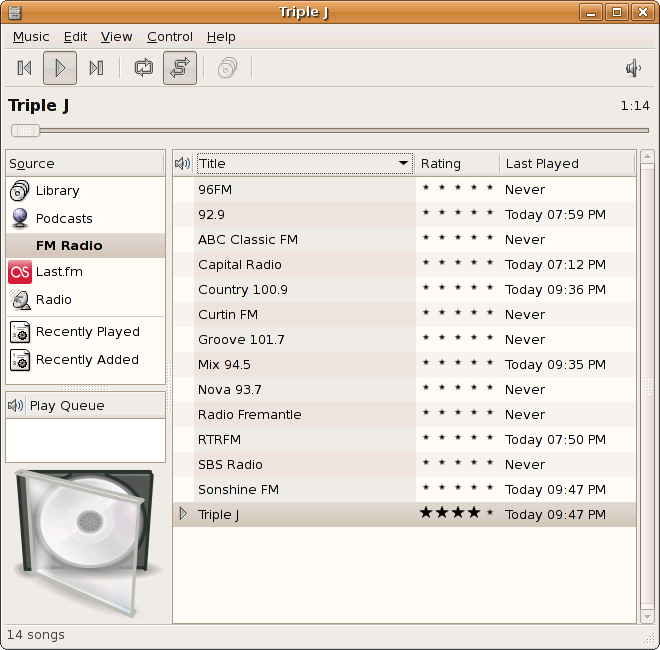

FM Radio in Rhythmbox – The Code

Previously, I posted about the FM radio plugin I was working on. I just posted the code to bug 168735. A few notes about the implementation:

- The code only supports Video4Linux 2 radio tuners (since that’s the interface my device supports, and the V4L1 compatibility layer doesn’t work for it). It should be possible to port it to support both protocols if someone is interested.

- It does not pass the audio through the GStreamer pipeline. Instead, you need to configure your mixer settings to pass the audio through (e.g. unmute the Line-in source and set the volume appropriately). It plugs in a GStreamer source that generates silence to work with the rest of the Rhythmbox infrastructure. This does mean that the volume control and visualisations won’t work.

- No properties dialog yet. If you want to set titles on the stations, you’ll need to edit rhythmdb.xml directly at the moment.

- The code assumes that the radio device is /dev/radio0.

Other than that, it all works quite well (I've been using it for the last few weeks).

FM Radio in Rhythmbox

I've been working on some FM radio support in Rhythmbox in my spare time. Below is a screenshot.

At the moment, the basic tuning and mute/unmute works fine with my DSB-R100. I don't have any UI for adding/removing stations at the moment though, so it is necessary to edit ~/.gnome2/rhythmbox/rhythmdb.xml to add them.

Comments:

Joel -

This feature would truly be a welcome addition!

I'm especially pleased it's being developed by a fellow Australian! (If the radio stations are any indication)

FM Radio Tuners in Feisty

I upgraded to Feisty about a month or so ago, and it has been a nice improvement so far. One regression I noticed though was that my USB FM radio tuner had stopped working (or at least, Gnomeradio could no longer tune it).

It turns out that some time between the kernel release found in Edgy and the one found in Feisty, the dsbr100 driver had been upgraded from the Video4Linux 1 API to Video4Linux 2. Now the driver nominally supports the V4L1 ioctls through the v4l1_compat, but it doesn't seem to implement enough V4L2 ioctls to make it usable (the VIDIOCGAUDIO ioctl fails).

Launchpad 1.0 Public Beta

As mentioned in the press release, we've got two new high profile projects using us for bug tracking: The Zope 3 Project and The Silva Content Management System. As part of their migration, we imported all their old bug reports (for Zope 3, and for Silva). This was done using the same import process that we used for the SchoolTool import. Getting this process documented so that other projects can more easily switch to Launchpad is still on my todo list.

UTC+9

Daylight saving started yesterday: the first time since 1991/1992 summer for Western Australia. The legislation finally passed the upper house on 21st November (12 days before the transition date). The updated tzdata packages were released on 27th November (6 days before the transition). So far, there hasn't been an updated package released for Ubuntu (see bug 72125).

One thing brought up in the Launchpad bug was that not all applications used the system /usr/share/zoneinfo time zone database. So other places that might need updating include:

San Francisco

I arrived in San Francisco today for the Canonical company conference. Seems like a nice place, and not too cold :). So far I've just gone for a walk along Fisherman's Wharf for a few hours. There look

On the plane trip, I had a chance to see Last Train to Freo, which I didn't get round to seeing in the cinemas. Definitely worth watching.

Daylight Saving in Western Australia

Like a few other states, Western Australia does not do daylight saving. Recently the state parliament has been discussing a Daylight saving bill. The bill is now before the Legislative Council (the upper house). If the bill gets passed, there will be a 3 year trial followed by a referendum to see if we want to continue.

I hadn't been paying too much attention to it, and had assumed they would be talking about starting the trial next year. But it seems they're actually talking about starting it on 3rd December. So assuming the bill gets passed, there will be less than a month til it starts.

Building obex-method

I published a Bazaar branch of the Nautilus obex method here:

http://bazaar.launchpad.net/~jamesh/+junk/gnome-vfs-obexftp

This version works with the hcid daemon included with Ubuntu Edgy, rather than requiring the btcond daemon from Maemo.

Some simple instructions on building it:

- Download and build the osso-gwobex library:

  svn checkout https://stage.maemo.org/svn/maemo/projects/connectivity/osso-gwobex/trunk osso-gwobex

  The debian/ directory should work fine to build a package using debuild.

- Download and build the obex module:

  bzr branch http://bazaar.launchpad.net/~jamesh/+junk/gnome-vfs-obexftp

  There is no debian packaging for this, just an autogen.sh script.

Playing Around With the Bluez D-BUS Interface

In my previous entry about using the Maemo obex-module on the desktop, Johan Hedberg mentioned that bluez-utils 3.7 included equivalent interfaces to the osso-gwconnect daemon used by the method. Since then, the copy of bluez-utils in Edgy has been updated to 3.7, and the necessary interfaces are enabled in hcid by default.

Before trying to modify the VFS code, I thought I'd experiment a bit with the D-BUS interfaces via the D-BUS python bindings. Most of the interesting method calls exist on the org.bluez.Adapter interface. We can easily get the default adapter with the following code:

OBEX in Nautilus

When I got my new laptop, one of the features it had that my previous one didn't was Bluetooth support. There are a few Bluetooth related utilities for Gnome that let you send and receive SMS messages and a few other things, but a big missing feature is the ability to transfer files to and from the phone easily.

Ideally, I'd be able to browse the phone's file system using Nautilus. Luckily, the Maemo guys have already done the hard work of writing a gnome-vfs module that speaks the OBEX FTP protocol. I had a go at compiling it on my laptop (running Ubuntu Edgy), and you can see the result below:

Ubuntu Bugzilla Migration Comment Cleanup

Earlier in the year, we migrated the bugs from bugzilla.ubuntu.com over to Launchpad. This process involved changes to the bug numbers, since Launchpad is used for more than just Ubuntu and already had a number of bugs reported in the system.

People often refer to other bugs in comments, which both Bugzilla and Launchpad conveniently turn into links. The changed bug numbers meant that the bug references in the comments ended up pointing to the wrong bugs. The bug import was done one bug at a time, so if bug A referred to bug B but bug B hadn't been imported by the time we were importing bug A, then we wouldn't know what bug number it should be referring to.
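As a rough illustration of why this needs a second pass: once every bug has been imported, the complete mapping from old Bugzilla numbers to new Launchpad numbers is known, and references in comment text can be rewritten in one sweep. The sketch below is only an assumption about how such a cleanup could look, not the actual migration script; the function name, the `id_map` dictionary and the reference pattern are all hypothetical.

```python
import re

# Matches references such as "bug 1234" or "Bug #1234" (assumed format).
BUG_REF = re.compile(r"\b(bug\s+#?)(\d+)", re.IGNORECASE)


def rewrite_bug_refs(comment, id_map):
    """Rewrite old bug numbers in a comment using the old -> new mapping.

    References to numbers missing from id_map are left untouched.
    """
    def replace(match):
        new_id = id_map.get(int(match.group(2)))
        if new_id is None:
            return match.group(0)
        return f"{match.group(1)}{new_id}"

    return BUG_REF.sub(replace, comment)
```

Because the whole comment is rewritten in a single substitution pass, a new number that happens to equal some other old number is not remapped a second time.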

Ekiga

I've been testing out Ekiga recently, and so far the experience has been a bit hit and miss.

- Firewall traversal has been unreliable. Some numbers (like the SIPPhone echo test) work great. In some cases, no traffic has gotten through (where both parties were behind Linux firewalls). In other cases, voice gets through in one direction but not the other. Robert Collins has some instructions on setting up siproxd which might solve all this though, so I'll have to try that.

- The default display for the main window is a URI entry box and a dial pad. It would make much more sense to display the user's list of contacts here instead (which are currently in a separate window). I rarely enter phone numbers on my mobile phone, instead using the address book. I expect that most VoIP users would be the same, provided that using the address book is convenient.

- Related to the previous point: the Ekiga.net registration service seems to know who is online and who is not. It would be nice if this information could be displayed next to the contacts.

- Ekiga supports multiple sound cards. It was a simple matter of selecting "Logitech USB Headset" as the input and output device on the audio devices page of the preferences to get it to use my headset. Now I hear the ring on my desktop's speakers, but can use the headset for calls.

- It is cool that Ekiga supports video calls, but I have no video camera on my computer. Even though I disabled video support in the preferences, there are still a lot of knobs and whistles in the UI related to video.

Even though there are still a few warts, Ekiga shows a lot of promise. As more organisations provide SIP gateways (such as the UWA gateway), this software will become more important as a way of avoiding expensive phone charges as well as a way of talking to friends/colleagues.

Firefox Ligature Bug Followup

Thought I'd post a followup on my previous post since it generated a bit of interest. First a quick summary:

- It is not an Ubuntu Dapper specific bug. With the appropriate combination of fonts and pango versions, it will exhibit itself on other Pango-enabled Firefox builds (it was verified on the Fedora build too).

- It is not a DejaVu bug, although it is one of the few fonts to exhibit the problem. The simple fact is that not many fonts provide ligature glyphs and include the required OpenType tables for them to be used.

- It isn't a Pango bug. The ligatures are handled correctly in normal GTK applications on Dapper. The bug only occurs with Pango >= 1.12, but that is because older versions did not make use of the OpenType tables in the "basic" shaper (used for Latin scripts like English).

- The bug only occurs in the Pango backend, but then the non-Pango renderer doesn't even support ligatures. Furthermore, there are a number of languages that can't be displayed correctly with the non-Pango renderer so it is not very appealing.

The Firefox bug is only triggered in the slow, manual glyph positioning code path of the text renderer. This only gets invoked if you have non-default letter or word spacing (such as justified text). In this mode, the width of the normal glyph of the first character in the ligature seems to be used for positioning, which results in the overlapping text.

Annoying Firefox Bug

Ran into an annoying Firefox bug after upgrading to Ubuntu Dapper. It seems to affect rendering of ligatures.

At this point, I am not sure if it is an Ubuntu specific bug. The current conditions I know of to trigger the bug are:

- Firefox 1.5 (I am using the 1.5.dfsg+1.5.0.1-1ubuntu10 package).

- Pango rendering enabled (the default for Ubuntu).

- The web page must use a font that contains ligatures and use those ligatures. Since the "DejaVu Sans" includes ligatures and is the default "sans serif" font in Dapper, this is true for a lot of websites.

- The text must be justified (e.g. use the "text-align: justify" CSS rule).

If you view a site where these conditions are met with an affected Firefox build, you will see the bug: ligature glyphs will be used to render character sequences like "ffi", but only the advance of the first character's normal glyph is used before drawing the next glyph. This results in overlapping glyphs:

London

I've been in London for a bit over a week now at the Launchpad sprint. We've been staying in a hotel near the Excel exhibition centre in Docklands, which has a nice view of the docks, and you can see the planes landing at the airport out of the windows of the conference rooms.

I met up with James Bromberger (one of the two main organisers of linux.conf.au 2003) on Thursday, which is the first time I've seen him since he left for the UK after the conference.

Launchpad featured on ELER

Launchpad got a mention in the latest Everybody Loves Eric Raymond comic. It is full of inaccuracies though — we use XML-RPC rather than SOAP.

Comments:

opi -

Oh, c'mon. It was quite fun. :-)

Bugzilla to Malone Migration

The Bugzilla migration on Friday went quite well, so we've now got all the old Ubuntu bug reports in Launchpad. Before the migration, we were up to bug #6760. Now that the migration is complete, there are more than 28000 bugs in the system. Here are some quick points to help with the transition:

- All bugzilla.ubuntu.com accounts were migrated to Launchpad accounts with a few caveats:

  - If you already had a Launchpad account with your bugzilla email address associated with it, then the existing Launchpad account was used.

  - No passwords were migrated from Bugzilla, due to differences in the method of storing them. You can set the password on the account at https://launchpad.net/+forgottenpassword.

  - If you had a Launchpad account but used a different email to the one on your Bugzilla account, then you now have two Launchpad accounts. You can merge the two accounts at https://launchpad.net/people/+requestmerge.

- If you have a bugzilla.ubuntu.com bug number, you can find the corresponding Launchpad bug number with the following URL:

Ubuntu Bugzilla Migration

The migration is finally going to happen, after much testing of migration code and improvements to Malone.

If all goes well, Ubuntu will be using a single bug tracker again on Friday (as opposed to the current system where bugs in main go in Bugzilla and bugs in universe go in Malone).

Comments:

Keshav -

Hiiii,

I am Keshav and i am 22. I am working as software dev.engineer in Software Company . I am currently working on Bugzilla. I think i can get some help in understanding how i can migrate bugzilla . Can you provide me the tips and list the actions so that i can come close in making a effective migration functionality

Switch users from XScreenSaver

Joao: you can configure XScreenSaver to show a "Switch User" button in its password dialog (which calls gdmflexiserver when run). This lets you start a new X session after the screen has locked. This feature is turned on in Ubuntu if you want to try it out.

Of course, this is not a full solution, since it doesn't help you switch to an existing session (you'd need to guess the correct Ctrl+Alt+Fn combo). There is code in gnome-screensaver to support this though, giving you a list of sessions you can switch to.

Moving from Bugzilla to Launchpad

One of the things that was discussed at UBZ was moving Ubuntu's bug tracking over to Launchpad. The current situation sees bugs in main being filed in bugzilla while bugs in universe go in Launchpad. Putting all the bugs in Launchpad is an improvement, since users only need to go to one system to file bugs.

I wrote the majority of the conversion script before the conference, but made a few important improvements at the conference after discussions with some of the developers. Since the bug tracking system is probably of interest to people who weren't at the conf, I'll outline some of the details of the conversion below:

Avahi on Breezy followup

So after I posted some instructions for setting up Avahi on Breezy, a fair number of people at UBZ did so. For most people this worked fine, but it seems that a few people's systems started spewing a lot of network traffic.

It turns out that the problem was actually caused by the zeroconf package (which I did not suggest installing) rather than Avahi. The zeroconf package is not needed for service discovery or .local name lookup, so if you are at UBZ you should remove the package or suffer the wrath of Elmo.

Avahi on Breezy

During conferences, it is often useful to be able to connect to other people's machines (e.g. for collaborative editing sessions with Gobby). This is a place where mDNS hostname resolution can come in handy, so you don't need to remember IP addresses.

This is quite easy to set up on Breezy:

- Install the avahi-daemon, avahi-utils and libnss-mdns packages from universe.

- Restart dbus in order for the new system bus security policies to take effect with "sudo invoke-rc.d dbus restart".

- Start avahi-daemon with "sudo invoke-rc.d avahi-daemon start".

- Edit /etc/nsswitch.conf, and add "mdns" to the end of the "hosts:" line.

Now your hostname should be advertised to the local network, and you can connect to other hosts by name (of the form hostname.local). You can also get a list of the currently advertised hosts and services with the avahi-discover program.
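The nsswitch.conf edit from the last step can be sketched in Python. This is only an illustration of the change, not something Avahi ships: the function name add_mdns_to_hosts is made up for this example, it works on the file contents as a string rather than touching /etc/nsswitch.conf, and it assumes the "hosts:" line has no trailing comment.

```python
def add_mdns_to_hosts(nsswitch_text: str) -> str:
    """Return nsswitch.conf contents with "mdns" appended to the hosts: line.

    Hypothetical helper for illustration; assumes no trailing comment
    on the hosts: line.
    """
    out = []
    for line in nsswitch_text.splitlines():
        stripped = line.strip()
        if stripped.startswith("hosts:"):
            sources = stripped[len("hosts:"):].split()
            # Only append if mdns is not already listed, so the edit is idempotent.
            if "mdns" not in sources:
                line = line.rstrip() + " mdns"
        out.append(line)
    return "\n".join(out) + "\n"


sample = "passwd: compat\nhosts: files dns\n"
print(add_mdns_to_hosts(sample), end="")
```

Running the function a second time on its own output leaves the file unchanged, which makes it safe to re-apply.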

Ubuntu Below Zero

I've been in Montreal since Wednesday for Ubuntu Below Zero.

As well as being my first time in Canada, it was my first time in transit through the USA. Unlike in most countries, I needed to pass through customs and get a visa waiver even though I was in transit. The visa waiver form had some pretty weird questions, such as whether I was involved in persecutions associated with Nazi Germany or its allies.

DSB-R100 USB Radio Tuner

Picked up a DSB-R100 USB Radio tuner off eBay recently. I did this partly because I have better speakers on my computer than on the radio in that room, and partly because I wanted to play around with timed recordings.

Setting it up was trivial: the dsbr100 driver got loaded automatically, and a program to tune the radio (gnomeradio) was available in the Ubuntu universe repository. I did need to change the radio device from /dev/radio to /dev/radio0 though.

Tag: Plug

PLUG September 2022: Lightning Talks

At the September 2022 PLUG meeting, we held lightning talks. I gave a short talk about recreating old video assets in HD using Inkscape and Pitivi.

PLUG June 2022: Hugo

At the June 2022 Perth Linux Users Group meeting, I gave a talk about building websites with the Hugo static website generator.

PLUG May 2021: GStreamer Editing Services

At the May 2021 Perth Linux Users Group meeting, I gave a talk about using GStreamer Editing Services to programmatically construct and render videos. In particular, it outlined how the library was used to prepare BigBlueButton recordings for publication on YouTube.

PLUG July 2020: Github Actions

At the July 2020 Perth Linux Users Group meeting, I gave a talk about Github Actions: the built-in continuous integration system provided by Github.

Building IoT projects with Ubuntu Core talk

Last week I gave a talk at Perth Linux Users Group about building IoT projects using Ubuntu Core and Snapcraft. The video is now available online. Unfortunately there were some problems with the audio setup leading to some background noise in the video, but it is still intelligible:

The slides used in the talk can be found here.

PLUG March 2019: Building IoT projects with Ubuntu Core

At the March 2019 Perth Linux Users Group meeting, I gave a talk about how Ubuntu Core can be used to build IoT projects that are secure and self-updating.

PLUG April 2018: Confined Apps on the Ubuntu Desktop

At the April 2018 Perth Linux Users Group meeting, I gave a talk about the snapd package manager, and how it is used to deploy confined applications on Ubuntu desktops.

PLUG September 2016: Talking to Chromecasts

At the September 2016 Perth Linux Users Group meeting, I gave a talk about writing Chromecast sender applications from scratch. It gave a rundown of how the Chromecast protocol worked, and what sorts of things could be done on the receiver side.

PLUG October 2015: Ubuntu Snappy

At the October 2015 Perth Linux Users Group meeting, I gave a talk about Ubuntu Snappy. This talk focused on the Ubuntu Core system as it existed back then, and looked at how applications could be deployed on the platform.

PLUG July 2014: Ubuntu Phone

At the July 2014 Perth Linux Users Group meeting, I gave a talk about the Ubuntu Touch/Ubuntu Phone project. This included an overview of getting Ubuntu running on hardware that primarily targeted Android, and how some of the design elements of the Unity Desktop were adapted to a small screen.

Tag: JavaScript

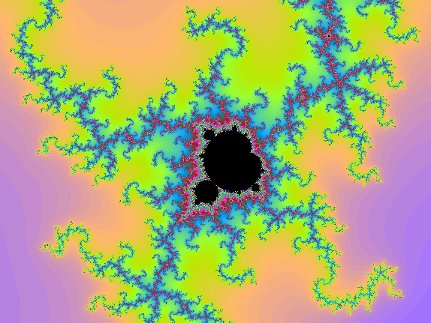

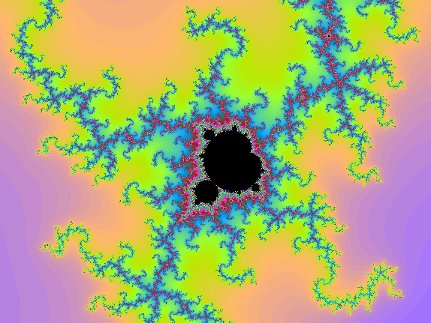

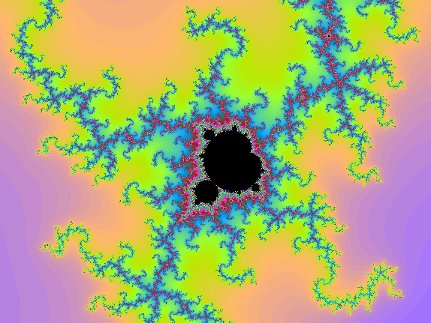

Improved JS Mandelbrot Renderer

Eleven years ago, I wrote a Mandelbrot set generator in JavaScript as a way to test out the then somewhat new Web Workers API, allowing me to make use of multiple cores and not tie up the UI thread with the calculations.

Recently I decided to see how much I could improve it using the advances in the web stack that have happened since then. The result was much faster than what I’d managed previously:

Javascript Mandelbrot Set Fractal Renderer

While at linux.conf.au earlier this year, I started hacking on a Mandelbrot Set fractal renderer implemented in JavaScript as a way to polish my JS skills. In particular, I wanted to get to know the HTML5 Canvas and Worker APIs.

The results turned out pretty well. Click on the image below to try it out:

Clicking anywhere on the fractal will zoom in. You'll need to reload the page to zoom out. Zooming in while the fractal is still being rendered will interrupt the previous rendering job.

Tag: Hugo

PLUG June 2022: Hugo

At the June 2022 Perth Linux Users Group meeting, I gave a talk about building websites with the Hugo static website generator.

Tag: Gnome

Converting BigBlueButton recordings to self-contained videos

When the pandemic lockdowns started, my local Linux User Group started looking at video conferencing tools we could use to continue presenting talks and other events to members. We ended up adopting BigBlueButton: as well as being Open Source, its focus on education made it well suited for presenting talks. It has the concept of a presenter role, and built-in support for slides (it sends them to viewers as images, rather than another video stream). It can also record sessions for later viewing.

Exploring Github Actions

To help keep myself honest, I wanted to set up automated test runs on a few personal projects I host on Github. At first I gave Travis a try, since a number of projects I contribute to use it, but it felt a bit clunky. When I found Github had a new CI system in beta, I signed up for the beta and was accepted a few weeks later.

While it is still in development, the configuration language feels lean and powerful. In comparison, Travis's configuration language has obviously evolved over time with some features not interacting properly (e.g. matrix expansion only working on the first job in a workflow using build stages). While I've never felt like I had a complete grasp of the Travis configuration language, the single page description of Actions configuration language feels complete.

Ubuntu Desktop

When the Ubuntu Phone project was cancelled, I moved to the desktop team. The initial goal for the team was to bring up a GNOME 3 based desktop for the Ubuntu 17.10 release that would be familiar to both Ubuntu users coming from the earlier Unity desktop, and users of “vanilla” GNOME 3.

Seeking in Transcoded Streams with Rygel

When looking at various UPnP media servers, one of the features I wanted was the ability to play back my music collection through my PlayStation 3. The complicating factor is that most of my collection is encoded in Vorbis format, which is not yet supported by the PS3 (at this point, it doesn't seem likely that it ever will).

Both MediaTomb and Rygel could handle this to an extent, transcoding the audio to raw LPCM data to send over the network. This doesn't require much CPU power on the server side, and only requires 1.4 Mbit/s of bandwidth, which is manageable on most home networks. Unfortunately the only playback controls enabled in this mode are play and stop: if you want to pause, fast forward or rewind then you're out of luck.
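The 1.4 Mbit/s figure matches what you get for uncompressed CD-quality audio. As a quick check, assuming 44.1 kHz, 16-bit, stereo LPCM (the sample format is my assumption here, not something the servers were confirmed to use), the arithmetic looks like this:

```python
def lpcm_bitrate(sample_rate_hz: int, bits_per_sample: int, channels: int) -> int:
    """Raw LPCM bitrate in bits per second (no container or network overhead)."""
    return sample_rate_hz * bits_per_sample * channels


# Assumed CD-quality parameters: 44.1 kHz, 16-bit samples, 2 channels.
cd_quality = lpcm_bitrate(44_100, 16, 2)
print(f"{cd_quality} bit/s = {cd_quality / 1_000_000:.2f} Mbit/s")
# prints "1411200 bit/s = 1.41 Mbit/s"
```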

Watching iView with Rygel

One of the features of Rygel that I found most interesting was the external media server support. It looked like an easy way to publish information on the network without implementing a full UPnP/DLNA media server (i.e. handling the UPnP multicast traffic, transcoding to a format that the remote system can handle, etc).

As a small test, I put together a server that exposes the ABC's iView service to UPnP media renderers. The result is a bit rough around the edges, but the basic functionality works. The source can be grabbed using Bazaar:

More Rygel testing

In my last post, I said I had trouble getting Rygel's tracker backend to function and assumed that it was expecting an older version of the API. It turns out I was incorrect and the problem was due in part to Ubuntu specific changes to the Tracker package and the unusual way Rygel was trying to talk to Tracker.

The Tracker packages in Ubuntu remove the D-Bus service activation file for the "org.freedesktop.Tracker" bus name so that if the user has not chosen to run the service (or has killed it), it won't be automatically activated. Unfortunately, instead of just calling a Tracker D-Bus method, Rygel was trying to manually activate Tracker via a StartServiceByName() call. This would fail even if Tracker was running, hence my assumption that it was a tracker API version problem.

Ubuntu packages for Rygel

I promised Zeeshan that I'd have a look at his Rygel UPnP Media Server a few months back, and finally got around to doing so. For anyone else who wants to give it a shot, I've put together some Ubuntu packages for Jaunty and Karmic in a PPA here:

Most of the packages there are just rebuilds or version updates of existing packages, but the Rygel ones were done from scratch. It is the first Debian package I've put together from scratch and it wasn't as difficult as I thought it might be. The tips from the "Teach me packaging" workshop at the Canonical All Hands meeting last month were quite helpful.

Sansa Fuze

On my way back from Canada a few weeks ago, I picked up a SanDisk Sansa Fuze media player. Overall, I like it. It supports Vorbis and FLAC audio out of the box, has a decent amount of on board storage (8GB) and can be expanded with a MicroSDHC card. It does use a proprietary dock connector for data transfer and charging, but that's about all I don't like about it. The choice of accessories for this connector is underwhelming, so a standard mini-USB connector would have been preferable since I wouldn't need as many cables.

PulseAudio

It seems to be fashionable to blog about experiences with PulseAudio, so I thought I'd join in.

I've actually had some good experiences with PulseAudio, seeing some tangible benefits over the ALSA setup I was using before. I've got a cheapish surround sound speaker set connected to my desktop. While it gives pretty good sound when all the speakers are used together, it sounds like crap if only the front left/right speakers are used.

Using Twisted Deferred objects with gio

The gio library provides both synchronous and asynchronous interfaces for performing IO. Unfortunately, the two APIs require quite different programming styles, making it difficult to convert code written to the simpler synchronous API to the asynchronous one.

For C programs this is unavoidable, but for Python we should be able to do better. And if you're doing asynchronous event driven code in Python, it makes sense to look at Twisted. In particular, Twisted's Deferred objects can be quite helpful.

Metrics for success of a DVCS

One thing that has been mentioned in the GNOME DVCS debate was that it is as easy to do "git diff" as it is to do "svn diff" so the learning curve issue is moot. I'd have to disagree here.

Traditional Centralised Version Control

With traditional version control systems (e.g. CVS and Subversion) as used by Free Software projects like GNOME, there are effectively two classes of users that I will refer to as "committers" and "patch contributors":

DVCS talks at GUADEC

Yesterday, a BoF was scheduled for discussion of distributed version control systems with GNOME. The BoF session did not end up really discussing the issues of what GNOME needs out of a revision control system, and some of the examples Federico used were a bit snarky.

We had a more productive meeting in the session afterwards where we went over some of the concrete goals for the system. The list from the blackboard was:

Prague

I arrived in Prague yesterday for the Ubuntu Developer Summit. Including time spent in transit in Singapore and London, the flights took about 30 hours.

As I was flying on BA, I got to experience Heathrow Terminal 5. It wasn't quite as bad as some of the horror stories I'd heard. There were definitely aspects that weren't forgiving of mistakes. For example, when taking the train to the "B" section there was a sign saying that if you accidentally got on the train when you shouldn't have it would take 40 minutes to get back to the "A" section.

Inkscape Migrated to Launchpad

Yesterday I performed the migration of Inkscape's bugs from SourceForge.net to Launchpad. This was a full import of all their historic bug data – about 6900 bugs.

As the import only had access to the SF user names for bug reporters, commenters and assignees, it was not possible to link them up to existing Launchpad users in most cases. This means that duplicate person objects have been created with email addresses like $USERNAME@users.sourceforge.net.

Weird GNOME Power Manager error message

Since upgrading to Ubuntu Gutsy I've occasionally been seeing the following notification from GNOME Power Manager:

I'd usually trigger this error by unplugging the AC adapter and then picking suspend from GPM's left click menu.

My first thought on seeing this was "What's a policy timeout, and why is it not valid?" followed by "I don't remember setting a policy timeout". Looking at bug 492132 I found a pointer to the policy_suppression_timeout gconf value, whose description gives a bit more information.

gnome-vfs-obexftp 0.4

It hasn't been long since the last gnome-vfs-obexftp release, but I thought it'd be good to get these fixes out before undertaking more invasive development. The new version is available from:

The highlights of this release are:

- If the phone does not provide free space values in the OBEX capability object, do not report this as zero free space. This fixes Nautilus file copy behaviour on a number of Sony Ericsson phones.

- Fix date parsing when the phone returns UTC timestamps in the folder listings.

- Add some tests for the capability object and folder listing XML parsers. Currently has sample data for Nokia 6230, Motorola KRZR K1, and Sony K800i, Z530i and Z710i phones.

These fixes should improve the user experience for owners of some Sony Ericsson phones by letting them copy files to the phone, rather than Nautilus just telling them that there is no free space. Unfortunately, if there isn't enough free space you'll get an error part way through the copy. This is the best that can be done with the information provided by the phone.

Investigating OBEX over USB

I've had a number of requests for USB support in gnome-vfs-obexftp. At first I didn't have much luck talking to my phone via USB. Running the obex_test utility from OpenOBEX gave the following results:

    $ obex_test -u
    Using USB transport, querying available interfaces
    Interface 0: (null)
    Interface 1: (null)
    Interface 2: (null)
    Use 'obex_test -u interface_number' to run interactive OBEX test client

Trying to talk via any of these interface numbers failed. After reading up a bit, it turned out that I needed to add a udev rule to give permissions on my phone. After doing so, I got a better result:

TXT records in mDNS

Havoc: for a lot of services advertised via mDNS, the client doesn't have the option of ignoring TXT records if it wants to behave correctly.

For example, the Bonjour Printing Specification puts the underlying print queue name in a TXT record (as multiple printers might be advertised by a single print server). While it says that the server can omit the queue name (in which case the default queue name "auto" is used), a client is not going to be able to do what the user asked without checking for the presence of the record.
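To make the printing case concrete, here is a rough Python sketch of a client looking up the queue name. It assumes the raw TXT record data is a sequence of length-prefixed "key=value" strings (the DNS-SD wire format) and that the queue name lives under the "rp" key, which is how I read the Bonjour Printing Specification. The function names are invented for this example and are not from any mDNS library.

```python
def encode_txt(items):
    """Build TXT RDATA: each "key=value" string is prefixed by a length byte."""
    out = b""
    for item in items:
        data = item.encode("utf-8")
        out += bytes([len(data)]) + data
    return out


def parse_txt(rdata):
    """Parse TXT RDATA into a dict of lower-cased keys to values."""
    entries = {}
    pos = 0
    while pos < len(rdata):
        length = rdata[pos]
        item = rdata[pos + 1:pos + 1 + length].decode("utf-8", "replace")
        pos += 1 + length
        key, _, value = item.partition("=")
        # Keys are case-insensitive; keep the first occurrence of each key.
        entries.setdefault(key.lower(), value)
    return entries


def queue_name(txt):
    """Return the print queue name, falling back to the default "auto"."""
    # "rp" as the key name is an assumption based on the Bonjour Printing Spec.
    return txt.get("rp", "auto")


print(queue_name(parse_txt(encode_txt(["txtvers=1", "rp=lp_duplex"]))))
print(queue_name(parse_txt(encode_txt(["txtvers=1"]))))
```

The second call shows the point of the post: the client only learns that it should use "auto" by looking for the record and finding it missing.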

gnome-vfs-obexftp 0.3

I've just released a new version of gnome-vfs-obexftp, which includes the features discussed previously. It can be downloaded from:

The highlights of the release include:

- Sync osso-gwobex and osso-gnome-vfs-extras changes from Maemo Subversion.

- Instead of asking hcid to set up the RFCOMM device for communication, use an RFCOMM socket directly. This is both faster and doesn't require enabling experimental hcid interfaces. Based on work from Bastien Nocera.

- Improve free space calculation for Nokia phones with multiple memory types (e.g. phone memory and a memory card). Now the free space for the correct memory type for a given directory should be returned. This fixes various free-space dependent operations in Nautilus such as copying files.

Any bug reports should be filed in Launchpad at:

Stupid Patent Application

I recently received a bug report about the free space calculation in gnome-vfs-obexftp. At the moment, the code exposes a single free space value for the OBEX connection. However, some phones expose multiple volumes via the virtual file system presented via OBEX.

It turns out my own phone does this, which was useful for testing. The Nokia 6230 can store things on the phone’s memory (named DEV in the OBEX capabilities list), or the Multimedia Card (named MMC). So the fix would be to show the DEV free space when browsing folders on DEV and the MMC free space when browsing folders on MMC.

FM Radio in Rhythmbox – The Code

Previously, I posted about the FM radio plugin I was working on. I just posted the code to bug 168735. A few notes about the implementation:

- The code only supports Video4Linux 2 radio tuners (since that’s the interface my device supports, and the V4L1 compatibility layer doesn’t work for it). It should be possible to port it to support both protocols if someone is interested.

- It does not pass the audio through the GStreamer pipeline. Instead, you need to configure your mixer settings to pass the audio through (e.g. unmute the Line-in source and set the volume appropriately). It plugs in a GStreamer source that generates silence to work with the rest of the Rhythmbox infrastructure. This does mean that the volume control and visualisations won’t work.

- No properties dialog yet. If you want to set titles on the stations, you’ll need to edit rhythmdb.xml directly at the moment.

- The code assumes that the radio device is /dev/radio0.

Other than that, it all works quite well (I've been using it for the last few weeks).

FM Radio in Rhythmbox

I've been working on some FM radio support in Rhythmbox in my spare time. Below is a screenshot:

At the moment, the basic tuning and mute/unmute works fine with my DSB-R100. I don't have any UI for adding/removing stations at the moment though, so it is necessary to edit ~/.gnome2/rhythmbox/rhythmdb.xml to add them.

Comments:

Joel -

This feature would truly be a welcome addition!

I'm especially pleased it's being developed by a fellow Australian! (If the radio stations are any indication)

FM Radio Tuners in Feisty

I upgraded to Feisty about a month or so ago, and it has been a nice improvement so far. One regression I noticed though was that my USB FM radio tuner had stopped working (or at least, Gnomeradio could no longer tune it).

It turns out that some time between the kernel release found in Edgy and the one found in Feisty, the dsbr100 driver had been upgraded from the Video4Linux 1 API to Video4Linux 2. Now the driver nominally supports the V4L1 ioctls through v4l1_compat, but it doesn't seem to implement enough V4L2 ioctls to make it usable (the VIDIOCGAUDIO ioctl fails).

ZeroConf support for Bazaar

When at conferences and sprints, I often want to see what someone else is working on, or to let other people see what I am working on. Usually we end up pushing up to a shared server and using that as a way to exchange branches. However, this can be quite frustrating when competing for outside bandwidth when at a conference.

It is possible to share the branch from a local web server, but that still means you need to work out the addressing issues.

gnome-vfs-obexftp 0.1 released

I put out a tarball release of gnome-vfs-obexftp here:

This includes a number of fixes since the work I did in October:

- Fix up some error handling in the dbus code.

- Mark files under the obex:/// virtual root as being local. This causes Nautilus to process the desktop entries and give us nice icons.

- Ship a copy of osso-gwobex, built statically into the VFS module. This removes the need to install another shared library only used by one application.

As well as the standard Gnome and D-BUS libraries, you will need OpenOBEX >= 1.2 and Bluez-Utils >= 3.7. The hcid daemon must be started with the -x flag to enable the experimental D-BUS interfaces used by the VFS module. You will also need a phone or other device that supports OBEX FTP :)

UTC+9

Daylight saving started yesterday: the first time since the 1991/1992 summer for Western Australia. The legislation finally passed the upper house on 21st November (12 days before the transition date). The updated tzdata packages were released on 27th November (6 days before the transition). So far, there hasn't been an updated package released for Ubuntu (see bug 72125).

One thing brought up in the Launchpad bug was that not all applications used the system /usr/share/zoneinfo time zone database. So other places that might need updating include:

Building obex-method

I published a Bazaar branch of the Nautilus obex method here:

http://bazaar.launchpad.net/~jamesh/+junk/gnome-vfs-obexftp

This version works with the hcid daemon included with Ubuntu Edgy, rather than requiring the btcond daemon from Maemo.

Some simple instructions on building it:

- Download and build the osso-gwobex library:

      svn checkout https://stage.maemo.org/svn/maemo/projects/connectivity/osso-gwobex/trunk osso-gwobex

  The debian/ directory should work fine to build a package using debuild.

- Download and build the obex module:

      bzr branch http://bazaar.launchpad.net/~jamesh/+junk/gnome-vfs-obexftp

  There is no debian packaging for this, just an autogen.sh script.

Playing Around With the Bluez D-BUS Interface

In my previous entry about using the Maemo obex-module on the desktop, Johan Hedberg mentioned that bluez-utils 3.7 included equivalent interfaces to the osso-gwconnect daemon used by the method. Since then, the copy of bluez-utils in Edgy has been updated to 3.7, and the necessary interfaces are enabled in hcid by default.

Before trying to modify the VFS code, I thought I'd experiment a bit with the D-BUS interfaces via the D-BUS python bindings. Most of the interesting method calls exist on the org.bluez.Adapter interface. We can easily get the default adapter with the following code:

OBEX in Nautilus

When I got my new laptop, one of the features it had that my previous one didn't was Bluetooth support. There are a few Bluetooth related utilities for Gnome that let you send and receive SMS messages and a few other things, but a big missing feature is the ability to transfer files to and from the phone easily.

Ideally, I'd be able to browse the phone's file system using Nautilus. Luckily, the Maemo guys have already done the hard work of writing a gnome-vfs module that speaks the OBEX FTP protocol. I had a go at compiling it on my laptop (running Ubuntu Edgy), and you can see the result below:

Gnome-gpg 0.5.0 Released

Over the weekend, I released gnome-gpg 0.5.0.

The main features in this release are support for running without gnome-keyring-daemon (of course, you can't save the passphrase in this mode), and use of the same keyring item name for the passphrase as Seahorse. The release can be downloaded here:

I also switched over from Arch to Bazaar. The conversion was fairly painless using bzr baz-import-branch, and means that I have both my revisions and Colin's revisions in a single tree. The branch can be pulled from:

Vote Counting and Board Expansion

Recently one of the Gnome Foundation directors quit, and there has been a proposal to expand the board by 2 members. In both cases, the proposed new members have been taken from the list of candidates who did not get seats in the last election from highest vote getter down.

While at first this sounds sensible, the voting system we use doesn't provide a way of finding out who would have been selected for the board if a particular candidate was removed from the ballot.

JHBuild Updates

The progress on JHBuild has continued (although I haven't done much in the last week or so). Frederic Peters of JhAutobuild fame now has a CVS account to maintain the client portion of that project in tree.

Perl Modules (#342638)

One of the other things that Frederic has been working on is support for building Perl modules (which use a Makefile.PL instead of a configure script). His initial patch worked fine for tarballs, but by switching over to the new generic version control code in jhbuild it was possible to support Perl modules maintained in any of the supported version control systems without extra effort.

JHBuild Improvements

I've been doing most JHBuild development in my bzr branch recently. If you have bzr 0.8rc1 installed, you can grab it here:

bzr branch http://www.gnome.org/~jamesh/bzr/jhbuild/jhbuild.dev

I've been keeping a regular CVS import going at http://www.gnome.org/~jamesh/bzr/jhbuild/jhbuild.cvs using Tailor, so changes people make to module sets in CVS make their way into the bzr branch. I've used a small hack so that merges back into CVS get recorded correctly in the jhbuild.cvs branch:

intltool and po/LINGUAS

Rodney: my suggestions for intltool were not intended as an attack. I just don't really see much benefit in intltool providing its own po/Makefile.in.in file.

The primary difference between the intltool po/Makefile.in.in and the version provided by gettext or glib is that it calls intltool-update rather than xgettext to update the PO template, so that strings get correctly extracted from file types like desktop entries, Bonobo component registration files, or various other XML files.

Ekiga

I've been testing out Ekiga recently, and so far the experience has been a bit hit and miss.

- Firewall traversal has been unreliable. Some numbers (like the SIPPhone echo test) work great. In some cases, no traffic has gotten through (where both parties were behind Linux firewalls). In other cases, voice gets through in one direction but not the other. Robert Collins has some instructions on setting up siproxd which might solve all this though, so I'll have to try that.

- The default display for the main window is a URI entry box and a dial pad. It would make much more sense to display the user's list of contacts here instead (which are currently in a separate window). I rarely enter phone numbers on my mobile phone, instead using the address book. I expect that most VoIP users would be the same, provided that using the address book is convenient.

- Related to the previous point: the Ekiga.net registration service seems to know who is online and who is not. It would be nice if this information could be displayed next to the contacts.

- Ekiga supports multiple sound cards. It was a simple matter of selecting "Logitech USB Headset" as the input and output device on the audio devices page of the preferences to get it to use my headset. Now I hear the ring on my desktop's speakers, but can use the headset for calls.

- It is cool that Ekiga supports video calls, but I have no video camera on my computer. Even though I disabled video support in the preferences, there are still a lot of knobs and whistles in the UI related to video.

Even though there are still a few warts, Ekiga shows a lot of promise. As more organisations provide SIP gateways (such as the UWA gateway), this software will become more important as a way of avoiding expensive phone charges as well as a way of talking to friends/colleagues.

Annoying Firefox Bug

Ran into an annoying Firefox bug after upgrading to Ubuntu Dapper. It seems to affect rendering of ligatures.

At this point, I am not sure if it is an Ubuntu specific bug. The current conditions I know of to trigger the bug are:

- Firefox 1.5 (I am using the 1.5.dfsg+1.5.0.1-1ubuntu10 package).

- Pango rendering enabled (the default for Ubuntu).

- The web page must use a font that contains ligatures and use those ligatures. Since "DejaVu Sans" includes ligatures and is the default "sans serif" font in Dapper, this is true for a lot of websites.

- The text must be justified (e.g. use the "text-align: justify" CSS rule).

If you view a site where these conditions are met with an affected Firefox build, you will see the bug: ligature glyphs will be used to render character sequences like "ffi", but only the advance of the first character's normal glyph is used before drawing the next glyph. This results in overlapping glyphs:

Re: Lazy loading

Emmanuel: if you are using a language like Python, you can let the language keep track of your state machine for something like that:

    import gobject  # PyGTK-era bindings (Python 2)

    def load_items(treeview, liststore, items):
        for obj in items:
            liststore.append((obj.get_foo(),
                              obj.get_bar(),
                              obj.get_baz()))
            yield True   # keep the idle function installed
        treeview.set_model(liststore)
        yield False      # remove the idle function

    def lazy_load_items(treeview, liststore, items):
        gobject.idle_add(load_items(treeview, liststore, items).next)

Here, load_items() is a generator that will iterate over a sequence like [True, True, ..., True, False]. The next() method is used to get the next value from the iterator. When used as an idle function with this particular generator, it results in one item being added to the list store per idle call until we get to the end of the generator body, where the "yield False" statement results in the idle function being removed.
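The same pattern can be tried without GTK. The sketch below is Python 3, where the bound method is spelled `__next__` rather than `next`. The run_idle() function is a made-up stand-in for the main loop's idle handling (it just keeps calling the callback until it returns False), and a plain list stands in for the list store.

```python
def load_items(store, items):
    """Generator that adds one item per step, then signals completion."""
    for obj in items:
        store.append(obj)
        yield True   # ask to be called again
    yield False      # done: the idle function should be removed


def run_idle(callback):
    """Hypothetical stand-in for a main loop: call until the callback returns False."""
    calls = 0
    while True:
        calls += 1
        if not callback():
            return calls


store = []
calls = run_idle(load_items(store, ["a", "b", "c"]).__next__)
print(store, calls)  # prints "['a', 'b', 'c'] 4"
```

Three calls add the items and the fourth returns False, so the callback is invoked once more than there are items.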

Gnome Logo on Slashdot

Recently, Jeff brought up the issue of the use of the old Gnome logo on Slashdot. The reasoning being that since we decided to switch to the new logo as our mark back in 2002, it would be nice if they used that mark to represent stories about us.

Unfortunately this request was shot down by Rob Malda, because the logo is "either ugly or B&W (read:Dull)".

Not to be discouraged, I had a go at revamping the logo to meet Slashdot's high standards. After all, if they were going to switch to the new logo, they would have done so when we first asked. The result is below:

Gnome-gpg 0.4.0 Released

I put out a new release of gnome-gpg containing the fixes I mentioned previously.

The internal changes are fairly extensive, but the user interface remains pretty much the same. The main differences are:

- If you enter an incorrect passphrase, the password prompt will be displayed again, the same as when gpg is invoked normally.

- If an incorrect passphrase is stored in the keyring (e.g. if you changed your key's passphrase), the passphrase prompt will be displayed. Previously you would need to use the --forget-passphrase option to tell gnome-gpg to ignore the passphrase in the keyring.

- The passphrase dialog is now set as a transient for the terminal that spawned it, using the same algorithm as zenity. This means that the passphrase dialog pops up on the same workspace as the terminal, and can't be obscured by the terminal.

Comments:

Marius Gedminas -

Any ideas how to use it with Mutt?

Using Tailor to Convert a Gnome CVS Module

In my previous post, I mentioned using Tailor to import jhbuild into a Bazaar-NG branch. In case anyone else is interested in doing the same, here are the steps I used:

1. Install the tools

First create a working directory to perform the import, and set up tailor. I currently use the nightly snapshots of bzr, which did not work with Tailor, so I also grabbed bzr-0.7:

$ wget http://darcs.arstecnica.it/tailor-0.9.20.tar.gz

$ wget http://www.bazaar-ng.org/pkg/bzr-0.7.tar.gz

$ tar xzf tailor-0.9.20.tar.gz

$ tar xzf bzr-0.7.tar.gz

$ ln -s ../bzr-0.7/bzrlib tailor-0.9.20/bzrlib

2. Prepare a local CVS Repository to import from

Revision Control Migration and History Corruption

As most people probably know, the Gnome project is planning a migration to Subversion. In contrast, I've decided to move development of jhbuild over to bzr. This decision is a bit easier for me than for other Gnome modules because:

- No need to coordinate with GDP or GTP, since I maintain the docs and there are no translations.

- Outside of the moduleset definitions, the large majority of development and commits are done by me.

- There aren't really any interesting branches other than the mainline.

I plan to leave the Gnome module set definitions in CVS/Subversion though, since many people help in keeping them up to date, so leaving them there has some value.

gnome-gpg improvement

The gnome-gpg utility makes PGP a bit nicer to use on Gnome with the following features:

- Present a Gnome password entry dialog for passphrase entry.

- Allow the user to store the passphrase in the session or permanent keyring, so it can be provided automatically next time.

Unfortunately there are a few usability issues:

- The anonymous/authenticated user radio buttons are displayed in the password entry dialog, while they aren't needed.

- The passphrase is prompted for even if gpg does not require it to complete the operation.

- If the passphrase is entered incorrectly, the user is not prompted for it again like they would be with plain gpg.

- If an incorrect passphrase is provided by gnome-keyring-daemon, you need to remove the item using gnome-keyring-manager or use the --force-passphrase command line argument.

I put together a patch to fix these issues by using gpg's --status-fd/--command-fd interface. Since this provides status information to gnome-gpg, it knows when to prompt for and send the passphrase, and when the passphrase it gave was wrong.

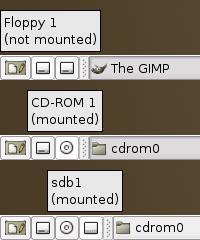
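As a rough illustration of how a wrapper can react to that status stream, here is a minimal Python sketch. It is not the gnome-gpg code: the action names are invented for the example, and it only looks at a few of the status keywords gpg writes to the status file descriptor (GET_HIDDEN passphrase.enter, BAD_PASSPHRASE and GOOD_PASSPHRASE), each prefixed with "[GNUPG:] ".

```python
STATUS_PREFIX = "[GNUPG:] "


def parse_status_line(line):
    """Split a '[GNUPG:] KEYWORD args...' line into (keyword, args).

    Returns None for anything that is not a status line.
    """
    if not line.startswith(STATUS_PREFIX):
        return None
    parts = line[len(STATUS_PREFIX):].split()
    if not parts:
        return None
    return parts[0], parts[1:]


def next_action(keyword, args):
    """Map a status keyword to a hypothetical wrapper action (names are illustrative)."""
    if keyword == "GET_HIDDEN" and args[:1] == ["passphrase.enter"]:
        return "send-passphrase"       # gpg is now waiting on the command fd
    if keyword == "BAD_PASSPHRASE":
        return "forget-and-reprompt"   # stored or typed passphrase was wrong
    if keyword == "GOOD_PASSPHRASE":
        return "offer-to-store"        # safe to save it in the keyring
    return "ignore"


for raw in ["[GNUPG:] GET_HIDDEN passphrase.enter",
            "[GNUPG:] BAD_PASSPHRASE 0123456789ABCDEF",
            "gpg: unrelated log output"]:
    parsed = parse_status_line(raw)
    if parsed is not None:
        print(parsed[0], "->", next_action(*parsed))
```

Because the prompt is driven by gpg's own requests, the wrapper never asks for a passphrase that gpg does not need, and a BAD_PASSPHRASE report gives it a natural point to discard a stale keyring entry and ask again.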

Drive Mount Applet (again)

Thomas: that behaviour looks like a bug. Are all of those volumes mountable by the user? The drive mount applet is only meant to show icons for the mount points the user can mount.

Note also that the applet is using the exact same information for the list of drives as Nautilus is. If the applet is confusing, then wouldn't Nautilus's "Computer" window also be confusing?

To help debug things, I wrote a little program to dump all the data provided by GnomeVFSVolumeMonitor:

Preferences for the Drive Mount Applet

In my previous article, I outlined the thought process behind the redesign of the drive mount applet. Although it ended up without any preferences, that doesn't necessarily mean it should have none.

A number of people commented on the last entry requesting a particular preference: the ability to hide certain drives in the drive list. Some of the options include:

- Let the user select which individual drives to display

- Let the user select which classes of drive to display (floppy, cdrom, camera, music player, etc).

- Select whether to display drives only when they are mounted, or only when they are mountable (this applies to drives which contain removable media).

Of these choices, the first is probably the simplest to understand, so might be the best choice. It could be represented in the UI as a list of the available drives with a checkbox next to each. In order to not hide new drives by default, it would probably be best to maintain a list of drives to hide rather than drives to show.
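A tiny Python sketch shows why storing the hidden drives works better than storing the visible ones. The function name and the mount point paths are made up for illustration:

```python
def visible_drives(available, hidden):
    """Return the drives to show: everything available that is not on the hide list."""
    return [drive for drive in available if drive not in hidden]


# The user has chosen to hide the floppy drive.
hidden = {"/media/floppy"}

before = visible_drives(["/media/cdrom", "/media/floppy"], hidden)

# A new drive is plugged in later; it is not on the hide list, so it shows up.
after = visible_drives(["/media/cdrom", "/media/floppy", "/media/usbdisk"], hidden)

print(before)
print(after)
```

With a list of drives to show instead, the new drive would stay invisible until the user found the preference and ticked it.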

Features vs. Preferences

As most people know, there have been some flamewars accusing Gnome developers of removing options for the benefit of "idiot users". I've definitely been responsible for removing preferences from some parts of the desktop in the past. Probably the most dramatic is the drive mount applet, which started off with a preferences dialog with the following options:

- Mount point: which mount point should the icon watch the state of?

- Update interval: at what frequency should the mount point be polled to check its status?

- Icon: what icon should be used to represent this mount point? A selection of drive type icons was provided for things like CDs, floppies, Zip disks, etc.

- Mounted Icon and Unmounted Icon: if "custom" was selected for the above, let the user pick custom image files to display the two states.

- Eject disk when unmounted: whether to attempt to eject the disk when the unmount command is issued.

- Use automount-friendly status test: whether to use a status check that wouldn't cause an automounter to mount the volume in question.

These options (and the applet in general) survived pretty much intact from the Gnome 1.x days. However the rest of Gnome (and the way people use computers in general) had moved forward since then, so it seemed sensible to rethink the preferences provided by the applet:

Re: Pixmap Memory Usage

Glynn: I suspect that the Pixmap memory usage has something to do with image rendering rather than applets in particular doing something stupid. Notice that most other GTK programs seem to be using similar amounts of pixmap memory.

To help test this hypothesis, I used the following Python program:

import gobject, gtk

win = gtk.Window()
win.set_title('Test')
win.connect('destroy', lambda w: gtk.main_quit())

def add_image():
    img = gtk.image_new_from_stock(gtk.STOCK_CLOSE,
                                   gtk.ICON_SIZE_BUTTON)
    win.add(img)
    img.show()

gobject.timeout_add(30000, add_image)

win.show()
gtk.main()

According to xrestop, this program has low pixmap memory usage when it starts, but jumps up to similar levels to the other apps after 30 seconds.

Switch users from XScreenSaver

Joao: you can configure XScreenSaver to show a "Switch User" button in its password dialog (which calls gdmflexiserver when run). This lets you start a new X session after the screen has locked. This feature is turned on in Ubuntu if you want to try it out.

Of course, this is not a full solution, since it doesn't help you switch to an existing session (you'd need to guess the correct Ctrl+Alt+Fn combo). There is code in gnome-screensaver to support this though, giving you a list of sessions you can switch to.

DSB-R100 USB Radio Tuner

Picked up a DSB-R100 USB Radio tuner off eBay recently. I did this partly because I have better speakers on my computer than on the radio in that room, and partly because I wanted to play around with timed recordings.

Setting it up was trivial -- the dsbr100 driver got loaded automatically, and a program to tune the radio (gnomeradio) was available in the Ubuntu universe repository. I did need to change the radio device from /dev/radio to /dev/radio0 though.

Playing with Google Maps API

I finally got round to playing with the Google Maps API, and the results can be seen here. I took data from the GnomeWorldWide wiki page and merged in some information from the Planet Gnome FOAF file (which now includes the nicknames and hackergotchis).

The code is available here (a BZR branch, but you can easily download the latest versions of the files directly). The code works roughly as follows:

HTTP resource watcher

I've finished most of the features of the HTTP resource watching code I was working on for GWeather. The main benefits over the existing gnome-vfs based code are:

- Simpler API. Just connect to the updated signal on the resource object, and you get notified when the resource changes.

- Supports gzip and deflate content encodings, to reduce bandwidth usage.

- Keeps track of the Last-Modified date and Etag value for the resource so that it can do conditional GETs of the resource for simple client side caching.

- Supports the Expires header. If the update interval is set at 30 minutes but the web server says that the resource won't be updated for an hour, then use the longer timeout until the next check.

- If a permanent redirect is received, then the new URI is used for future checks.

- If a 410 Gone response is received, then future checks are not queued (they can be restarted with a refresh() call).
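The caching rules in that list can be sketched in a few lines of Python. This is only an illustration of the logic, not the watcher's actual API: the function names are invented, and the real code is C on top of libsoup.

```python
from datetime import datetime, timedelta, timezone
from email.utils import parsedate_to_datetime


def conditional_headers(last_modified=None, etag=None):
    """Build request headers for a conditional GET from the remembered validators."""
    headers = {"Accept-Encoding": "gzip, deflate"}
    if last_modified:
        headers["If-Modified-Since"] = last_modified
    if etag:
        headers["If-None-Match"] = etag
    return headers


def next_check_delay(interval, expires_header, now):
    """Wait for the normal interval, or until Expires if that is further away."""
    if not expires_header:
        return interval
    try:
        expires = parsedate_to_datetime(expires_header)
    except (TypeError, ValueError):
        return interval  # unparseable header: fall back to the normal interval
    if expires.tzinfo is None:
        expires = expires.replace(tzinfo=timezone.utc)
    return max(interval, expires - now)


now = datetime(2026, 1, 1, 12, 0, tzinfo=timezone.utc)
half_hour = timedelta(minutes=30)
print(conditional_headers("Thu, 01 Jan 2026 11:00:00 GMT", '"abc123"'))
print(next_check_delay(half_hour, "Thu, 01 Jan 2026 13:00:00 GMT", now))
```

A server that answers the conditional request with 304 Not Modified sends no body, which is where the bandwidth saving comes from.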

I've also got some code to watch the HTTP proxy settings in GConf, but that seems to trigger a hang in libsoup (bug 309867).

Bryan's Bazaar Tutorial

Bryan: there are a number of steps you can skip in your little tutorial:

- You don't need to set my-default-archive. If you often work with multiple archives, you can treat working copies for all archives pretty much the same. If you are currently inside a working copy, any branch names you use will be relative to your current one, so you can still use short branch names in almost all cases (this is similar to the reason I don't set $CVSROOT when working with CVS).

HTTP code in GWeather

One of the things that pisses me off about gweather is that it occasionally hangs and stops updating. It is a bit easier to tell when this has occurred these days, since it is quite obvious something's wrong if gweather thinks it is night time when it clearly isn't.

The current code uses gnome-vfs, which isn't the best choice for this sort of thing. The code is the usual mess you get when turning an algorithm inside out to work through callbacks in C:

pkg-config patches

I uploaded a few patches to the pkg-config bugzilla recently, which will hopefully make their way into the next release.

The first is related to bug 3097, which has to do with the broken dependent library elimination code added to 0.17.

The patch adds a Requires.private field to .pc files. It contains a list of required packages, as Requires currently does, and has the following properties:

Clipboard Handling

Phillip: your idea about direct client to client clipboard transfers is doable with the current X11 clipboard model:

- Clipboard owner advertises that it can convert selection to some special target type such as "client-to-client-transfer" or similar.

- If the pasting client supports client to client transfer, it can check the list of supported targets for the "client-to-client-transfer" target type and request conversion to that target.

- The clipboard owner returns a string containing details of how to request the data (e.g. hostname/port, or some other scheme that only works for the local host).

- Pasting application contacts the owner out of band and receives the data.

Yes, this requires modifications to applications in order to work correctly, but so would switching to a new clipboard architecture.
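The negotiation can be modelled in plain Python without touching X11 at all. Everything here is hypothetical (the class, the address format and the fallback to UTF8_STRING); it only mirrors the four steps listed above:

```python
TRANSFER_TARGET = "client-to-client-transfer"  # the made-up target name from the steps


class ClipboardOwner:
    """Toy stand-in for the application that owns the selection."""

    def __init__(self, data, supports_direct):
        self._data = data
        self._supports_direct = supports_direct

    def targets(self):
        # Step 1: advertise the special target next to the ordinary ones.
        targets = ["UTF8_STRING"]
        if self._supports_direct:
            targets.append(TRANSFER_TARGET)
        return targets

    def convert(self, target):
        # Step 3: for the special target, return how to reach us, not the data.
        if target == TRANSFER_TARGET and self._supports_direct:
            return "local:clip-1"  # hypothetical out-of-band address
        if target == "UTF8_STRING":
            return self._data
        raise ValueError("unsupported target: " + target)

    def fetch_out_of_band(self, address):
        # Step 4: the paster contacts the owner directly and receives the data.
        if address != "local:clip-1":
            raise ValueError("unknown address: " + address)
        return self._data


def paste(owner):
    # Step 2: use direct transfer only if the owner lists the special target.
    if TRANSFER_TARGET in owner.targets():
        return owner.fetch_out_of_band(owner.convert(TRANSFER_TARGET))
    return owner.convert("UTF8_STRING")  # unmodified owners still work


print(paste(ClipboardOwner("big payload", supports_direct=True)))
print(paste(ClipboardOwner("plain text", supports_direct=False)))
```

The fallback branch is the point of doing this inside the existing model: applications that know nothing about the new target keep working unchanged.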

Anonymous voting

I put up a proposal for implementing anonymous voting for the foundation elections on the wiki. This is based in part on David's earlier proposal, but simplifies some things based on the discussion on the list and fleshes out the implementation a bit more.

It doesn't really add to the security of the elections process (doing so would require a stronger form of authentication than "can read a particular email account"), but does anonymise the election results and lets us do things like tell the voter that their completed ballot was malformed on submission.

Clipboard Manager

Phillip: